Neural Networks coupled with Genetic Algorithms can really accelerate the learning process to solve a certain problem.

All the big companies are now using Neural Nets(NNs) and Genetic Algorithms(GAs) to help their NNs to learn better and more efficiently. In this article, I will go over the pros and cons of coupling NNs and GAs and share a few thoughts of my own. I will also, describe the basic algorithm used in this process.

I hope this article teaches you something new! Let’s begin.

Genetic Algorithms were very popular before NNs. Since, NNs required a lot of data, and GAs didn’t. GAs were used mostly to simulate environments and behaviors of entities in a population. They were mostly used to learn the path to a problem which we knew the answer to.

GAs are still used today but Machine Learning(ML) has mostly taken over.

How They Work

GAs according to Wikipedia:

In computer science and operations research, a genetic algorithm (GA) is a metaheuristic inspired by the process of natural selection that belongs to the larger class of evolutionary algorithms (EA). Genetic algorithms are commonly used to generate high-quality solutions to optimization and search problems by relying on bio-inspired operators such as mutation, crossover and selection.

If this still doesn’t sink in, then I’m sure Daniel Shiffman’s playlist of GAs will help. It helped me learn how GAs work and Shiffman’s videos are really good in general. Although I do recommend speeding them up.

The Problem with NNs

NNs have helped us solve so many problems. But there’s a huge problem that they still have. Hyperparameters! These are the only values that can not be learned… Until now.

Note: Hyper-parameters are values required by the NN to perform properly, given a problem.

We can use GAs to learn the best hyper-parameters for a NN! This is absolutely awesome!

Now, we don’t have to worry about “knowing the right hyperparameters” since, they can be learned using a GA. Also, we can use this to learn the parameter’s(weights) of a NN as well.

Since, in a GA, the entities learn the optimum genome for the specified problem, here, the genome of each NN will be its set of hyper-parameters.

Solving the Problem

To solve the hyper-parameter problem, we need to do the following:

- Create a population of several NNs.

- Assign random(in a range) hyper-parameters to all the NNs.

-

Perform the following for some amount of iterations:

- Train all the NNs simultaneously or one by one.

- After all of them are done training, calculate their training cost.

- Calculate the “fitness”(how well it did in that iteration) of each NN based on its cost. Fitness will be used to increase the chances of a NN “reproducing.” Higher the fitness, higher is its chance of reproducing. However, the best NN will have the lowest cost. If we set the fitness of that NN equal to its cost, then the best NN will have the least chance of being picked for reproduction. We can instead assign the fitness, to the reciprocal of the cost. Therefore, the lowest cost, will have the highest fitness. There are ways to assign better values to the fitness but that’s beyond the scope of this article.

- Find the maximum fitness in the population(required for step 5, can be disregarded depending on the implementation of step 5).

- Pick 2 NNs based on a probability system regarding their fitness. I am omitting the explanation of this, since this might seem too complicated to some. Here’s a video, explaining the topic.

- Crossover the genes of the 2 NNs. This will create a “child” NN. This NN should have some properties of the first NN and some of the second. This process has many different implementations as well.

- Mutate the genes of the child. Mutating is required to maintain some amount of randomness in the GA.

- Perform steps 5 — 7 for the amount of NNs in the population. You should store the “children” created, in a new population and assign the new population to the variable containing the old population.

Note: You should create a class of the NN whose parameters you’d like to learn. The population should then initially, contain several instances of that same NN class. Also, everything written above can be learned from Shiffman’s playlist.

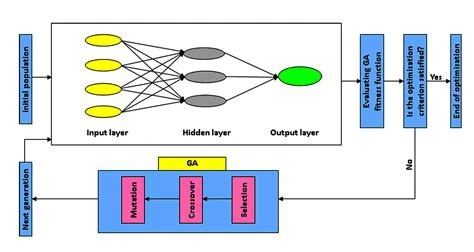

Performing all the steps above, at the end of the latest generation, your algorithm will have found the population containing a NN with the optimum hyper-parameters. It will be the fittest NN of all in the population. The picture shown below attempts to explain the process.

Iterative process of finding hyper-parameters using GAs

Another Problem

This being a good solution to learning your hyper-parameters, it does come with its own problems. The 2 most prominent being the problem of computational resources and time.

Training many NNs simultaneously or one by one, several times requires a lot of time and computational resources. This limits the implementation of this solution to only the people who are willing to put in the money and buy a lot of processing power.

This is also the reason why it’s widely used by large companies.

An Example

A little less than a year ago, OpenAIs Dota 2 bot beat a pro Dota 2 player(Article and YouTube video). What took the player years to learn and master, took the bot only a few weeks. In a video I saw on YouTube, an OpenAI Engineer explained how they trained the bot.

They used a GA to train their bot. Since, they had the processing power necessary, they were able to run multiple instances of the Dota 2 simultaneously with each having an instance of the bot playing the game. It took them 2 weeks to teach the bot with this process.

Just imagine how much processing power that must have required.

Conclusion

In my opinion, GAs are good to teach a NN but they will not be my first choice. Instead, I will try to look for better ways to learn the hyper-parameters of a NN. If there are any, that is.

However, if in the future I get access to a lot of processing power, I will be sure to try this method out.

That’s it, Hope you learned something new!